FMOD Engine User Manual 2.03


3. Core API Guide

3.1 What is the Core API

The Core API is a programmer API that is intended to cover the basics/primitives of sound. This includes concepts such as 'Channels', 'Sounds', 'DSPs', 'ChannelGroups', 'Sound Groups', 'Recording' and concepts for 3D Sound and occlusion.

As a programmer, you can implement all these features in code, without needing sound designer tools.

3.2 Linking Plug-ins

You can extend the functionality of FMOD through the use of plug-ins. Each plug-in type (codec, DSP and output) has its own API you can use. Whether you have developed the plug-in yourself or you are using one from a third party, there are two ways to integrate it into FMOD.

3.2.1 Static

When the plug-in is available to you as source code, you can hook it up to FMOD by including the source file and using one of the plug-in registration APIs: System::registerCodec, System::registerDSP or System::registerOutput. Each of these functions accepts the relevant description structure that provides the functionality of the plug-in. By convention, plug-in developers create a function that returns this description structure for you. For example, FMOD_AudioGaming_AudioMotors_GetDSPDescription is the name used by one of our partner plug-ins (it follows the form "FMOD_[CompanyName]_[ProductName]_Get[PluginType]Description"). If you don't have source code but do have a static library (such as .lib or .a), the process is almost the same: link the static library with your project, then call the description function and pass its return value into the registration function.

3.2.2 Dynamic

Another way plug-in code is distributed is as a prebuilt dynamic library (such as .so, .dll or .dylib). These are even easier to integrate with FMOD than static libraries. First, ensure the plug-in file is in the working directory of your application, which is often the same location as the application executable. Then, in your code, call System::loadPlugin, passing in the name of the library, and that's all there is to it. Under the hood, FMOD opens the library and searches for well-known functions similar to the description functions mentioned above; once found, the plug-in is registered and ready for use.

3.3 API Features

This section will give a broad overview of the Core API's features.

3.3.1 Initialization - Simple start up with no configuration necessary

The Core API has an automatic configuration feature, which makes it simple to start.

At the most basic level, all that is needed to configure the Core API is creating the System object and calling System::init on it. A more detailed description of initialization can be found in the Core API Getting Started white paper.

The sound card can be manually selected using the System::setDriver function. The mixing rate and speaker mode of the FMOD system can be configured with System::setSoftwareFormat, and the resampling method with the resamplerMethod member of FMOD_ADVANCEDSETTINGS (see System::setAdvancedSettings). Modifying the mixer settings only adjusts the internal mixing format. At the end, the audio stream is always converted to the output settings set by the user (i.e. the settings in the control panel in Windows, or the standard 7.1/48 kHz output mode on Xbox One or PS4).

3.3.2 Audio devices - Automatic detection of device insertion / removal

The Core API has automatic sound card detection and recovery during playback. If a new device is inserted after initialization, FMOD will seamlessly jump to it, assuming it is the higher priority device. An example of this would be a USB headset being plugged in.

If the device that is being played on is removed (such as a USB audio device), FMOD will automatically jump to the device considered next most important (i.e. on Windows, the new 'default' device).

If a device is inserted, then removed, it will jump to the device it was originally playing on.

The programmer can override the sound card detection behavior with a custom callback: FMOD_SYSTEM_CALLBACK_DEVICELISTCHANGED.

3.3.3 Audio devices - support for plug-ins

The Core API has support for user-created output plug-ins. A developer can create a plug-in to take FMOD audio output to a custom target. This could be a hardware device, or a non-standard file/memory/network based system.

An output mode can run in real-time, or non real-time which allows the developer to run FMOD's mixer/streamer/system at faster or slower than real-time rates.

See System::registerOutput documentation for more.

Plug-ins can be created inline with the application, or compiled as a stand-alone dynamic library (e.g. .dll or .so).

3.3.4 File formats - Support for over 20 audio formats built in

The Core API has native/built-in code to support many file formats out of the box. WAV, MP3 and Ogg Vorbis are supported by default, as are many more obscure formats like AIFF, FLAC and others. Sequenced formats, which are played back in real time by a built-in sequencer, are also included; MIDI/MOD/S3M/XM/IT are examples of these.

A more comprehensive list can be found in the FMOD_SOUND_TYPE list.

3.3.5 File formats - Support for the most optimal format for games (FSB)

FMOD also supports an optimal format for games, called FSB (FMOD Sound Bank).

Many audio file formats are not well suited to games. They are not efficient, and can lead to lots of random file access, large memory overhead, and slow load times.

FSB format benefits are:

No-seek loading. An FSB can be loaded in 3 continuous file reads: 1. the main header, 2. the sub-sound metadata, 3. the raw audio data.

'Memory point' feature. An FSB can be loaded into memory by the user, and simply 'pointed to' so that FMOD uses the memory where it is, and does not allocate extra memory. See FMOD_OPENMEMORY_POINT.

Low memory overhead. A lot of file formats contain 'fluff' such as tags, and metadata. FSB stores information in compressed, bit packed formats for efficiency.

Multiple sounds in 1 file. Thousands of sounds can be stored inside 1 file, and selected by the API function Sound::getSubSound.

Efficient Ogg Vorbis. FSB strips out the 'Ogg' and keeps the 'Vorbis'. 1 codebook can be shared between all sounds, saving megabytes of memory (compared to loading .ogg files individually).

FADPCM codec support. FMOD supports a very efficient ADPCM variant called FADPCM, which is many times faster than a standard ADPCM decoder (no branching), and is therefore very efficient on mobile devices. The quality is also far superior to most ADPCM variants, and lacks the 'hiss' noticeable in those formats.

3.3.6 File formats - Support for plug-ins

The Core API has support for user created file format plug-ins. A developer can create callbacks for FMOD to call when System::createSound or System::createStream is executed by the user, or when the decoding engine is asking for data.

Plug-ins can be created inline with the application, or compiled as a stand-alone dynamic library (e.g. .dll or .so).

See the System::registerCodec documentation for more.

3.3.7 Just play a simple sound - createSound and playSound

The simplest way to get started, and to learn the most basic functionality of the Core API, is to initialize the FMOD system, load an audio file, and play it. That's it!

For more information on how to initialize the FMOD Engine and play audio with the Core API, see the Getting Started white paper.

See the play_sound example for sample code demonstrating the simple playback of an audio file.

3.3.8 High quality / efficient streaming and compressed samples

The Core API benefits from over 15 years of use in millions of end-user devices, which has driven the evolution of a highly stable, low-latency mixing/streaming engine.

3.3.9 Streaming

Streaming is the ability to take a large audio asset, and read/play it in real time in small chunks at a time, avoiding the need to load the entire asset into memory.

Whether an asset should be streamed is a question of resource availability and allocation. Each currently-playing streaming asset requires constant file I/O (which is to say, access to the disk) and a very small amount of memory for the stream's ring buffer; whereas assets using other loading modes require much more memory but only require file I/O when they’re first loaded, and only require these resources once, no matter how many instances of the asset are playing.

File I/O is a very limited resource on many platforms, and is needed for many things other than audio. Fortunately, most games load much of their content into memory up-front at the start of new levels and areas, and many users do not run applications that constantly access the disk in the background. This means that there are usually periods when the player’s device is not otherwise loading content from disk, meaning that streaming assets that play in those periods can enjoy uncontested and uninterrupted file I/O. By contrast, a streaming asset that plays while the disk is being accessed regularly by anything else may not be able to access the disk frequently enough to refresh its ring buffer, and so may suffer from buffer starvation. Buffer starvation of a streaming asset manifests as the sound stuttering or stopping entirely.

The streaming loading mode is therefore best used for assets that meet the following conditions:

- The asset would take up an inconveniently large amount of memory if fully loaded.

- Few instances of the asset ever need to be playing at the same time.

- Few or no other streaming assets ever need to be playing at the same time as the asset.

- The asset only plays at times when the game does not need to read or write much to the drive on which the asset or its bank file is stored.

Accordingly, streaming is typically reserved for:

- Music

- Voice overs and dialogue

- Long ambiance tracks

To play a Sound as a stream, add the FMOD_CREATESTREAM flag to the System::createSound function, or use the System::createStream function. These options both equate to the same end behavior.

3.3.10 Internet streaming

FMOD streaming supports internet addresses. Supplying http or https in the filename will switch FMOD to streaming using native http, shoutcast or icecast.

Playlist files (such as ASX/PLS/M3U/WAX formats) are supported, including redirection.

Proxy specification and authentication are supported, as well as real-time shoutcast stream switching, metadata retrieval and packet loss notification.

3.3.11 Streaming settings

Streaming behavior can be adjusted in several ways. Streaming a file takes 2 threads: one for file reading, and one for codec decoding/decompression. File buffer sizes can be adjusted with System::setStreamBufferSize, and the codec decoding buffer size can be adjusted with the decodebuffersize member of FMOD_CREATESOUNDEXINFO, or with FMOD_ADVANCEDSETTINGS::defaultDecodeBufferSize.

3.3.12 Compressed sample playback

For shorter sounds, rather than decompressing the audio file into memory, the user may wish to play the audio file in memory, as is.

This is more efficient than a stream, as it does not require disk access or extra threads to read or decode. A stream can only be played once at a time, but a compressed sample has no such limit: it can be played multiple times simultaneously.

If a platform supports a hardware format like AT9 on PS4 or XMA on Xbox One, it is best to use these codecs, as the decoding of the data is handled by separate media chips, taking the majority of the processing off the CPU.

Refer to the Getting Started white paper on how to use the FMOD_CREATECOMPRESSEDSAMPLE flag and configuration of codec memory.

See the relevant Platform Details section for details on platform specific audio formats.

3.3.13 Decompressed samples

Loading a Sound with System::createSound will, by default, cause the audio data to be decompressed into memory and played back in PCM format.

PCM data is just raw uncompressed audio data, for more information see Sample Data.

Decompressed/uncompressed samples use little to no CPU time to process, because PCM is the same format used by the FMOD mixing engine and by the audio device itself. This may be desirable on a mobile device with limited CPU cycles, if you have enough memory.

Decompressed PCM data uses a lot more memory than Vorbis encoded FSB for example. It could be up to 10x more.

A typical use case for mobile developers: Compress the audio data heavily for distribution (to reduce the download size), then decompress it at start-up/load time, to save CPU time, rather than playing it compressed.
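To estimate the memory cost of this trade-off, the size of decompressed PCM data can be computed directly from duration, sample rate, channel count and sample size. This is plain arithmetic, not an FMOD API; bytesPerSample is 2 for 16-bit PCM.

```cpp
#include <cstdint>

// Bytes needed to hold fully decompressed PCM audio in memory.
std::uint64_t pcmBytes(double seconds, std::uint32_t sampleRate,
                       std::uint32_t channels, std::uint32_t bytesPerSample)
{
    const std::uint64_t frames = static_cast<std::uint64_t>(seconds * sampleRate);
    return frames * channels * bytesPerSample;
}
```

For example, one minute of 48 kHz stereo 16-bit audio needs `pcmBytes(60.0, 48000, 2, 2)` = 11,520,000 bytes (about 11 MB) once decompressed; comparing that against the size of the encoded file gives the memory multiplier for a given asset.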

3.3.14 Voices / Channels - 'Virtual Voices' - play thousands of sounds at once

The Core API includes a 'virtual voice system.' This system allows you to play hundreds or even thousands of Channels at once, but to only have a small number of them actually producing sound and consuming resources. The others are 'virtual,' emulated with a simple position and audibility update, and so are not heard and don't consume CPU time. For example: A dungeon may have 200 torches burning on the walls in various places, but at any given time only the loudest of these torches are really audible.

FMOD dynamically makes Channels 'virtual' or 'real' depending on real time audibility calculations (based on distance/volume/priority/occlusion). A Channel which is playing far away or with a low volume becomes virtual, and may change back into a real Channel when it comes closer or louder due to Channel or ChannelGroup API calls.

For more information about the virtual voice system, see the Virtual Voice white paper.

3.3.15 Channels / Grouping - 'Channel Groups' and hierarchical sub-mixing (buses)

While it is possible to add the same effect to multiple channels, creating a submix of those channels and adding the effect to that submix requires only a single instance of the effect, and thus consumes fewer resources. This reduces CPU usage greatly.

The primary tool for achieving this in FMOD Studio and the Studio API is the "bus"; in the Core API, it is the "channel group." If multiple channels are routed into the same bus or channel group, it creates a sub-mix of those signals. If an effect is added to that bus or ChannelGroup, the effect only processes that sub-mix, rather than processing every individual Channel that contributed to it.

The volume of a ChannelGroup can be altered, which allows for master volume groups. The volume is scaled based on a fader DSP inside a ChannelGroup. All Channels and ChannelGroups have a fader DSP by default.

ChannelGroups are hierarchical. ChannelGroups can contain ChannelGroups, which can contain other ChannelGroups and Channels.

Many attributes can be applied to a ChannelGroup, including things like speaker mix, and 3D position. A whole group of Channels, and the ChannelGroups below them, can be positioned in 3D with 1 call, rather than trying to position all of them individually.

'Master Volume', 'SFX Volume' and 'Music Volume' are typical settings in a game. They can be implemented by setting up an 'SFX' ChannelGroup and a 'Music' ChannelGroup, and making them children of the master ChannelGroup (see System::getMasterChannelGroup).

3.3.16 3D sound and spatialization

The Core API has a variety of features that allow Sounds to be placed in 3D space, so that they move around the listener as part of an environment. These features include panning, pitch shifting with doppler, attenuating with volume scaling, and special filtering.

FMOD 3D spatialization features:

- Multiple attenuation roll-off models. Roll-off is the behavior of the volume of the Sound as it gets closer to the listener or further away. Choose between linear, inverse, linear square, inverse tapered and custom roll-off modes. Custom roll-off allows a FMOD_3D_ROLLOFF_CALLBACK to be set to allow the user to calculate how the volume roll-off happens. If a callback is not convenient, FMOD also allows an array of points that are linearly interpolated between, to denote a 'curve', using ChannelControl::set3DCustomRolloff.

- Doppler pitch shifting. Accurate pitch shifting, controlled by the user velocity setting of the listener and the Channel or ChannelGroup, is calculated and set on the fly by the FMOD 3D spatialization system.

- Vector Based Amplitude Panning (VBAP). This system pans the Sound in the user's speakers in real time, supporting mono, stereo, up to 5.1 and 7.1 surround speaker setups.

- Occlusion. A Sound's underlying Channels or ChannelGroups can have lowpass filtering applied to them to simulate sound going through walls or being muffled by large objects.

- 3D Reverb Zones for reverb panning. See more about this in the 3D Reverb section. Reverb can also be occluded to not go through walls or objects.

- Polygon based geometry occlusion. Add polygon data to FMOD's geometry engine, and FMOD will automatically occlude sound in realtime using raycasting. See more about this in the 3D Polygon based geometry occlusion section.

- Multiple listeners. In a split-screen game, FMOD can support a listener for each player, so that 3D Sounds attenuate correctly.

- Morphing between 2D and 3D with multi-channel audio formats. Sounds can be a point source, or be morphed by the user into 2D audio, which is great for distance-based envelopment. The closer a Sound is, the more it can spread into the other speakers, rather than flipping abruptly from one side to the other as it pans past the listener. See ChannelControl::set3DLevel for the function that lets the user change this mix.

- Stereo and multi-channel audio formats can be used for 3D audio. Typically a mono audio format is used for 3D audio. Multi-channel audio formats can be used to give extra impact. By default multi-channel sample data is collapsed into a mono point source. To 'spread' the multiple channels use ChannelControl::set3DSpread. This can give a more spatial effect for a sound that is coming from a certain direction. A subtle spread of sound in the distance may give the impression of being more effectively spatialized as if it were reflecting off nearby surfaces, or being 'big' and emitting different parts of the sound in different directions.

- Spatialization plug-in support. 3rd party VR audio plug-ins can be used to give more realistic panning over headphones.

To load a Sound as 3D simply add the FMOD_3D flag to the System::createSound function, or the System::createStream function.

The next 3 important things to do are:

- Set the 'listener' position, orientation and velocity once per frame with System::set3DListenerAttributes.

- Set the Channel 3D attributes for the handle that was returned from System::playSound, with ChannelControl::set3DAttributes. If 3D positioning of a group of Channels (a ChannelGroup) is required, set the ChannelGroup to be 3D once with ChannelControl::setMode, then call ChannelControl::set3DAttributes on it instead.

- Call System::update once per frame so the 3D calculations can update based on the positions and other attributes.

Read more about 3D sound in the 3D Sound white paper or the Spatial Audio white paper.

3.3.17 3D polygon-based geometry occlusion

The Core API supports the supply of polygon mesh data that can be processed in real time to create the effect of occlusion in a 3D world. In real world terms, you can stop sounds from traveling through walls, or even confine reverb inside a geometric volume so that it doesn't leak out into other areas.

To use the FMOD Geometry Engine, create a mesh object with System::createGeometry. Then add polygons to each mesh with Geometry::addPolygon. Each object can be translated, rotated and scaled to fit your environment.

3.3.18 Recording - Record to a sound from microphone or line in

The Core API has the ability to record directly from an input into a Sound object. This Sound can then be played back after it has been recorded, or the raw data can be retrieved with Sound::lock and Sound::unlock functions.

The Sound can also be played while it is recording, to allow realtime effects. A simple technique to achieve this is to start recording, then wait a small amount of time (for example, 50 ms), then play the Sound. This keeps the play cursor just behind the record cursor. For information on how to do this and an example of source code, see the "record" example in the /core/examples/bin folder of the FMOD Engine distribution.

3.3.19 DSP Effects - Support for over 30 special effects built in

The Core API has native/built in code to support many special effects out of the box, such as low-pass, compressor, reverb and multiband EQ. A more comprehensive list can be found in the FMOD_DSP_TYPE list.

An effect can be created with System::createDSPByType and added to a Channel or ChannelGroup with ChannelControl::addDSP.

3.3.20 DSP Effects - Reverb types and 3D reverb zones

The Core API has two types of reverb available, and a virtual 3D reverb system which can be used to simulate hundreds of environments or more, with only 1 reverb.

Standard Reverb

A built-in high quality I3DL2 standard compliant reverb, which is used for a fast, configurable environment simulation, and is used for the 3D reverb zone system, described below.

To set an environment simply, use System::setReverbProperties. This lets you set a global environment, or up to 4 different environments, which all Channels are affected by.

Each Channel can have a different reverb wet mix by setting the level in ChannelControl::setReverbProperties.

Read more about the I3DL2 configuration in the Reverb Notes section of the documentation. To avoid confusion when starting out, simply play with the pre-set list of environments in FMOD_REVERB_PRESETS.

Convolution Reverb

There is also an even higher quality Convolution Reverb which allows a user to import an impulse response file (a recording of an impulse in an environment which is used to convolve the signal playing at the time), and have the environment sound like it is in the space the impulse was recorded in.

This is an expensive-to-process effect, so FMOD supports GPU acceleration to offload the processing to the graphics card. This greatly reduces the overhead of the effect, making it almost negligible. GPU acceleration is supported on Xbox One and PS4 platforms. Hardware acceleration is also supported on the PS5 and Xbox Scarlett platforms.

Convolution reverb can be created with System::createDSPByType with FMOD_DSP_TYPE_CONVOLUTIONREVERB and added to a ChannelGroup with ChannelControl::addDSP. It is recommended to only implement one or a limited number of these effects and place them on a sub-mix/group bus (a ChannelGroup), and not per Channel.

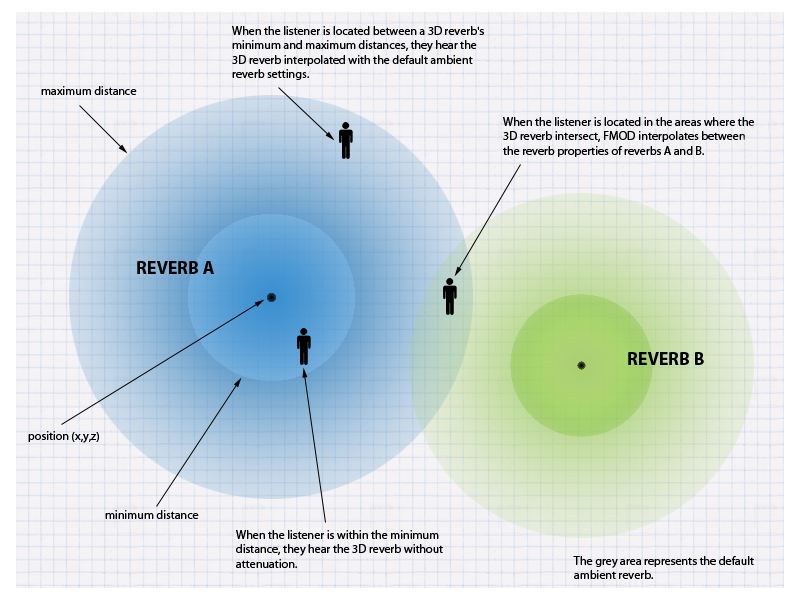

Virtual 3D Reverb System

A Virtual 3D reverb zone system is supported, using the main built-in system I3DL2 reverb.

Virtual '3D reverb spheres' can be created and placed around a 3D world, in unlimited numbers, causing no extra CPU expense.

As the listener travels through these spheres, FMOD will automatically morph and attenuate the levels of the system reverb to make it sound like you are in different environments as you move around the world.

Spheres can overlap, and based on where the listener is within each sphere, FMOD will morph the reverb to the appropriate mix of environments.

A 3D reverb sphere can be created with System::createReverb3D and the position set with Reverb3D::set3DAttributes. To set a sphere's reverb properties, Reverb3D::setProperties can be used.

For more information on the 3D reverb zone system, and implementation information, read the 3D Reverb white paper.

3.3.21 DSP Effects - Support for plug-ins

The Core API has support for user-created DSP plug-ins. A developer can either load a pre-existing plug-in, or create one inside the application, using 'callbacks'.

Callbacks can be specified by the user, for example when System::createDSP is called, or when the DSP runs and wants to process PCM data inside FMOD's mixer.

Plug-ins can be developed inline with the application, or compiled as a stand-alone dynamic library (e.g. .dll or .so).

To load a pre-existing plug-in executable, use the System::loadPlugin function.

To implement callbacks directly in a program, System::registerDSP can be used.

To create a stand-alone dynamic library, use the same callbacks, but expose them through the FMOD_DSP_DESCRIPTION struct returned by the exported FMODGetDSPDescription function.

See the DSP Plug-in API white paper on how to make a plug-in, and /examples/fmod_gain.cpp in the FMOD Engine distribution as a working example.

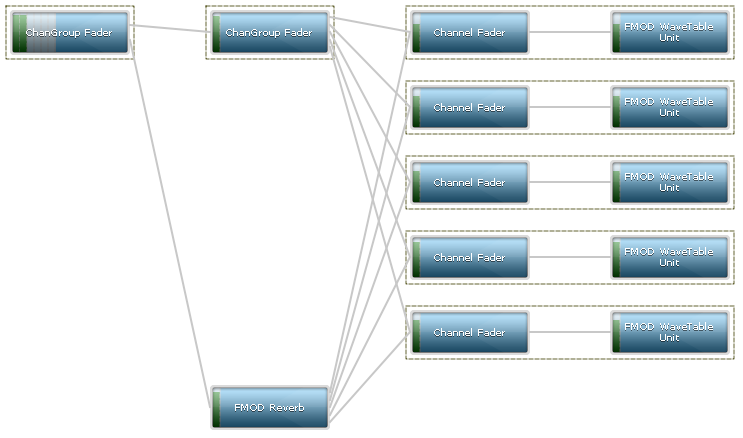

3.3.22 DSP Engine - Flexible, programmable soft-synth architecture

The Core API runs on a modular synth architecture, which allows connections of signal processing nodes (the 'FMOD DSP' concept).

A directed graph processing tree allows the signal to flow from 'generators' at the tail node (a Sound playing through from System::playSound, or a DSP creating sound from System::playDSP for example), to other nodes, mixing together until they reach the head node, where the final result is sent to the sound card.

A visual representation taken directly from the FMOD Profiler.

FMOD typically processes the Sound in the graph in blocks of 512 samples (about 10 ms at 48 kHz) on some platforms, or 1024 samples (about 21 ms) on others. This is the granularity of the system, and affects how smoothly parameter changes, such as pitch or volume, will be heard.

FMOD pre-built DSP effects can be inserted into and removed from the graph with functions like DSP::addInput and DSP::disconnectFrom.

For detailed information read the DSP Architecture and Usage white paper.

3.3.23 Non blocking loads, threads and thread safety

Core API commands are thread safe and queued. They get processed either immediately, or in background threads, depending on the command.

By default, things like initialization and loading a Sound are processed on the main thread.

Mixing, streaming, geometry processing, file reading and file loading are, or can be, done in background threads. Every effort is made to avoid blocking the main application's loop unexpectedly.

One of the slowest operations is loading a Sound. To place a Sound load into the background so that it doesn't affect processing in the main application thread, the user can use the FMOD_NONBLOCKING flag in System::createSound or System::createStream.

Thread affinity is configurable on some platforms.

For detailed information about FMOD and threads please refer to the Threads and Thread Safety white paper.

3.3.24 Performance

The Core API has evolved over the years to have a comprehensive suite of effects and codecs with minimal overhead for memory and CPU.

All platforms come with performance saving features. For example vector optimized floating point math is used heavily. Some of the technologies used include SSE, NEON, AVX, VMX, and VFP assembler.

Typically the most expensive part of Sound playback is real-time compressed sample playback.

The Core API allows you to limit how many compressed samples can be decoded at once, to reduce CPU overhead. This is configurable using the System::setAdvancedSettings function (see also the codec configuration mentioned in the Compressed sample playback section of this document).

Adjusting the sample rate quality, resampling quality, number of mixed Channels and decoded Channels is configurable to get the best scalability for your application.

To find out more about configuring FMOD to save CPU time, refer to the CPU Performance white paper, or to get an idea about Core performance figures on various platforms, refer to the Performance Reference section of the documentation.

3.4 Configuration - memory and file systems

The Core API caters to the needs of applications and their memory and file systems. Both the file system and memory allocation can be 'plugged in' so that FMOD uses the application's systems instead of its own.

Setting up a custom file system is a simple process of calling System::setFileSystem.

The file system handles the normal cases of open, read, seek and close, but adds an extra feature which is useful for prioritized/delayed file systems: FMOD supports the FMOD_FILE_ASYNCREAD_CALLBACK callback for deferred, prioritized loading and reading, which is a common feature in advanced game streaming engines.

An async read callback can return immediately without supplying data. When the application supplies the data at a later time, even from a different thread, it signals completion through the 'done' member of the FMOD_ASYNCREADINFO structure to get FMOD to consume it. Take care not to wait too long before supplying data (or increase stream buffer sizes), so that streams don't audibly stutter or skip.

A custom memory allocator is set up by calling Memory_Initialize. This is not an FMOD class member function because it needs to be called before any FMOD objects are created, including the System object.

To read more about setting up memory pools or memory environments, refer to the Memory Management white paper.

3.5 Controlling a Spatializer DSP

Controlling a spatializer DSP using the Core API requires setting the data parameter associated with 3D attributes. This will be a data parameter of type FMOD_DSP_PARAMETER_DATA_TYPE_3DATTRIBUTES or FMOD_DSP_PARAMETER_DATA_TYPE_3DATTRIBUTES_MULTI. The Studio::System sets this parameter automatically when a Studio::EventInstance's position changes; however, if you are using the Core API System directly, you must set this DSP parameter explicitly.

This will work with our pan DSP, the object panner DSP, the resonance source / soundfield spatializers and any other third party plug-ins that make use of the FMOD spatializers.

Attributes must use a coordinate system with the positive Y axis being up, the positive X axis being right and the positive Z axis being forward (a left-handed coordinate system). If the System was initialized with the FMOD_INIT_3D_RIGHTHANDED flag, FMOD converts passed-in coordinates from right-handed to left-handed for the plug-in.

The absolute data for FMOD_DSP_PARAMETER_3DATTRIBUTES is straightforward; however, the relative part requires some work to calculate.

/*
This code assumes the availability of a maths library with basic support for 3D and 4D vectors and 4x4 matrices:

// 3D vector
class Vec3f
{
public:
    float x, y, z;

    // Initialize x, y & z from the corresponding elements of FMOD_VECTOR
    Vec3f(const FMOD_VECTOR &v);
};

// 4D vector
class Vec4f
{
public:
    float x, y, z, w;

    Vec4f(const Vec3f &v, float w);

    // Initialize x, y & z from the corresponding elements of FMOD_VECTOR
    Vec4f(const FMOD_VECTOR &v, float w);

    // Copy x, y & z to the corresponding elements of FMOD_VECTOR
    void toFMOD(FMOD_VECTOR &v) const;
};

// 4x4 matrix
class Matrix44f
{
public:
    // Columns of the matrix, so that m * v == X * v.x + Y * v.y + Z * v.z + W * v.w
    Vec4f X, Y, Z, W;
};

// 3D Vector cross product
Vec3f crossProduct(const Vec3f &a, const Vec3f &b);

// 4D Vector addition
Vec4f operator+(const Vec4f &a, const Vec4f &b);

// 4D Vector subtraction
Vec4f operator-(const Vec4f &a, const Vec4f &b);

// Matrix multiplication m * v
Vec4f operator*(const Matrix44f &m, const Vec4f &v);

// 4x4 Matrix inverse
Matrix44f inverse(const Matrix44f &m);
*/

void calculatePannerAttributes(const FMOD_3D_ATTRIBUTES &listenerAttributes, const FMOD_3D_ATTRIBUTES &emitterAttributes, FMOD_DSP_PARAMETER_3DATTRIBUTES &pannerAttributes)
{
    // pannerAttributes.relative is the emitter position and orientation transformed into the listener's space:

    // First we need the 3D transformation for the listener.
    Vec3f right = crossProduct(listenerAttributes.up, listenerAttributes.forward);

    Matrix44f listenerTransform;
    listenerTransform.X = Vec4f(right, 0.0f);
    listenerTransform.Y = Vec4f(listenerAttributes.up, 0.0f);
    listenerTransform.Z = Vec4f(listenerAttributes.forward, 0.0f);
    listenerTransform.W = Vec4f(listenerAttributes.position, 1.0f);

    // Now we use the inverse of the listener's 3D transformation to transform the emitter attributes into the listener's space:
    Matrix44f invListenerTransform = inverse(listenerTransform);

    Vec4f position = invListenerTransform * Vec4f(emitterAttributes.position, 1.0f);

    // Setting the w component of the 4D vector to zero means the matrix multiplication will only rotate the vector.
    Vec4f forward = invListenerTransform * Vec4f(emitterAttributes.forward, 0.0f);
    Vec4f up = invListenerTransform * Vec4f(emitterAttributes.up, 0.0f);
    Vec4f velocity = invListenerTransform * (Vec4f(emitterAttributes.velocity, 0.0f) - Vec4f(listenerAttributes.velocity, 0.0f));

    // We are now done computing the relative attributes.
    position.toFMOD(pannerAttributes.relative.position);
    forward.toFMOD(pannerAttributes.relative.forward);
    up.toFMOD(pannerAttributes.relative.up);
    velocity.toFMOD(pannerAttributes.relative.velocity);

    // pannerAttributes.absolute is simply the emitter position and orientation:
    pannerAttributes.absolute = emitterAttributes;
}
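Because FMOD expects a listener's forward and up vectors to be unit length and perpendicular, the listener's rotation is orthonormal and its inverse is simply its transpose. The relative attributes can therefore also be computed with dot products against the listener's right, up and forward axes instead of a general 4x4 inverse. The sketch below is a self-contained alternative under that assumption; `Vec3` and `Attributes` are minimal stand-ins for FMOD_VECTOR and FMOD_3D_ATTRIBUTES, and the absolute attributes would still be copied from the emitter as in calculatePannerAttributes.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-ins for FMOD_VECTOR and FMOD_3D_ATTRIBUTES.
struct Vec3 { float x, y, z; };
struct Attributes { Vec3 position, velocity, forward, up; };

Vec3 operator-(const Vec3 &a, const Vec3 &b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(const Vec3 &a, const Vec3 &b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(const Vec3 &a, const Vec3 &b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Rotates a world-space vector into the listener's frame by projecting it onto the
// listener's right, up and forward axes (the transpose of the listener rotation).
Vec3 intoListenerFrame(const Attributes &listener, const Vec3 &v) {
    // The transpose shortcut is only valid for unit-length forward and up vectors.
    assert(std::fabs(dot(listener.forward, listener.forward) - 1.0f) < 1e-3f);
    assert(std::fabs(dot(listener.up, listener.up) - 1.0f) < 1e-3f);
    Vec3 right = cross(listener.up, listener.forward); // same construction as above
    return {dot(v, right), dot(v, listener.up), dot(v, listener.forward)};
}

// Same result as the relative part of calculatePannerAttributes, without a 4x4 inverse.
Attributes relativeAttributes(const Attributes &listener, const Attributes &emitter) {
    Attributes rel;
    rel.position = intoListenerFrame(listener, emitter.position - listener.position);
    rel.velocity = intoListenerFrame(listener, emitter.velocity - listener.velocity);
    rel.forward = intoListenerFrame(listener, emitter.forward);
    rel.up = intoListenerFrame(listener, emitter.up);
    return rel;
}
```

For example, a listener at (0, 0, 5) facing -Z sees an emitter at (2, 0, 5) at relative position (-2, 0, 0), that is, two units to its left.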

When using FMOD_DSP_PARAMETER_3DATTRIBUTES_MULTI, call calculatePannerAttributes once for each listener, passing in that listener's attributes, and use each result to fill in the corresponding per-listener entry.

Pass the data to the DSP with DSP::setParameterData, using the index of the parameter whose data type is FMOD_DSP_PARAMETER_DATA_TYPE_3DATTRIBUTES or FMOD_DSP_PARAMETER_DATA_TYPE_3DATTRIBUTES_MULTI. Parameter indices differ between DSPs, so for third-party DSPs check with the DSP's author for the correct index.

3.6 Upmix/Downmix Behavior

FMOD handles upmixing and downmixing using mix matrices. The tables below show the mix matrix layout for each output speaker mode. In each table, a row gives the formula used to produce the output speaker named in the "Output" column, and each remaining column corresponds to an input speaker layout; an empty cell means that input layout does not feed that output speaker. Custom mix matrices can be set with ChannelControl::setMixMatrix. See FMOD_SPEAKER and FMOD_SPEAKERMODE for more details on the available speaker layouts.

For a better result when playing 5.1 content on a stereo output device, you can select the Dolby Pro Logic II downmix algorithm by passing FMOD_INIT_PREFER_DOLBY_DOWNMIX as an init flag to System::init.

| Abbreviation | Speaker |
|---|---|
| M | Mono |
| L | Left |
| R | Right |
| FL | Front Left |
| FR | Front Right |
| C | Center |
| LFE | Low Frequency Effects |
| SL | Surround Left |
| SR | Surround Right |
| BL | Back Left |
| BR | Back Right |
| TFL | Top Front Left |
| TFR | Top Front Right |
| TBL | Top Back Left |
| TBR | Top Back Right |

3.6.1 FMOD_SPEAKERMODE_MONO

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| M | M | L x 0.707 + R x 0.707 | FL x 0.500 + FR x 0.500 + SL x 0.500 + SR x 0.500 | FL x 0.447 + FR x 0.447 + C x 0.447 + BL x 0.447 + BR x 0.447 | FL x 0.447 + FR x 0.447 + C x 0.447 + BL x 0.447 + BR x 0.447 | FL x 0.378 + FR x 0.378 + C x 0.378 + SL x 0.378 + SR x 0.378 + BL x 0.378 + BR x 0.378 | FL x 0.378 + FR x 0.378 + C x 0.378 + SL x 0.378 + SR x 0.378 + BL x 0.378 + BR x 0.378 |
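The coefficients in the mono table are consistent with an equal-power downmix: each of the N contributing input channels (LFE and height channels excluded) is scaled by 1/√N, so N uncorrelated channels of equal power sum to the power of a single channel. This is an observation about the table values rather than a statement of FMOD's internal algorithm:

$$
M = \frac{1}{\sqrt{N}} \sum_{i=1}^{N} x_i, \qquad
\frac{1}{\sqrt{2}} \approx 0.707, \quad
\frac{1}{\sqrt{4}} = 0.500, \quad
\frac{1}{\sqrt{5}} \approx 0.447, \quad
\frac{1}{\sqrt{7}} \approx 0.378
$$

for stereo (N = 2), quad (N = 4), 5.0 and 5.1 (N = 5) and 7.1 and 7.1.4 (N = 7) inputs respectively.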

3.6.2 FMOD_SPEAKERMODE_STEREO

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| L | M x 0.707 | L | FL + SL x 0.707 | FL + C x 0.707 + BL x 0.707 | FL + C x 0.707 + BL x 0.707 | FL + C x 0.707 + SL x 0.707 + BL x 0.596 | FL + C x 0.707 + SL x 0.707 + BL x 0.596 |
| R | M x 0.707 | R | FR + SR x 0.707 | FR + C x 0.707 + BR x 0.707 | FR + C x 0.707 + BR x 0.707 | FR + C x 0.707 + SR x 0.707 + BR x 0.596 | FR + C x 0.707 + SR x 0.707 + BR x 0.596 |
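To show how a column of these tables maps onto a mix matrix, the sketch below encodes the 5.1 column of the stereo table as a 2 x 6 matrix in row-major order (rows are output speakers, columns are input channels) and applies it to one frame. The input channel order FL, FR, C, LFE, BL, BR follows the labels used in these tables and is an assumption; check FMOD_SPEAKER for the actual channel ordering, and the ChannelControl::setMixMatrix reference for its expected matrix layout, before passing a matrix to FMOD. `mixFrame` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Applies a row-major mix matrix (outChannels rows x inChannels columns) to one frame:
// out[o] = sum over i of matrix[o * inChannels + i] * in[i].
std::vector<float> mixFrame(const std::vector<float> &matrix, const std::vector<float> &in,
                            std::size_t outChannels) {
    const std::size_t inChannels = in.size();
    assert(matrix.size() == outChannels * inChannels);
    std::vector<float> out(outChannels, 0.0f);
    for (std::size_t o = 0; o < outChannels; ++o)
        for (std::size_t i = 0; i < inChannels; ++i)
            out[o] += matrix[o * inChannels + i] * in[i];
    return out;
}

// 5.1 column of the FMOD_SPEAKERMODE_STEREO table. LFE has no entry in that
// column, so its gain is 0 in both rows.
const std::vector<float> kDownmix51ToStereo = {
    // FL   FR    C       LFE   BL      BR
    1.0f, 0.0f, 0.707f, 0.0f, 0.707f, 0.0f,  // L = FL + C x 0.707 + BL x 0.707
    0.0f, 1.0f, 0.707f, 0.0f, 0.0f, 0.707f,  // R = FR + C x 0.707 + BR x 0.707
};
```

A centre-only frame, for instance, comes out at 0.707 in both L and R, which keeps the centre channel's power roughly constant across the two speakers.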

3.6.3 FMOD_SPEAKERMODE_QUAD

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| FL | M x 0.707 | L | FL | FL + C x 0.707 | FL + C x 0.707 | FL x 0.965 + FR x 0.258 + C x 0.707 + SL x 0.707 | FL x 0.965 + FR x 0.258 + C x 0.707 + SL x 0.707 |
| FR | M x 0.707 | R | FR | FR + C x 0.707 | FR + C x 0.707 | FL x 0.258 + FR x 0.965 + C x 0.707 + SR x 0.707 | FL x 0.258 + FR x 0.965 + C x 0.707 + SR x 0.707 |
| SL | | | SL | BL | BL | SL x 0.707 + BL x 0.965 + BR x 0.258 | SL x 0.707 + BL x 0.965 + BR x 0.258 |
| SR | | | SR | BR | BR | SR x 0.707 + BL x 0.258 + BR x 0.965 | SR x 0.707 + BL x 0.258 + BR x 0.965 |

3.6.4 FMOD_SPEAKERMODE_SURROUND

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| FL | M x 0.707 | L | FL x 0.961 | FL | FL | FL + SL x 0.367 | FL + SL x 0.367 |
| FR | M x 0.707 | R | FR x 0.961 | FR | FR | FR + SR x 0.367 | FR + SR x 0.367 |
| C | | | | C | C | C | C |
| BL | | | FL x 0.274 + SL x 0.960 + SR x 0.422 | BL | BL | SL x 0.930 + BL x 0.700 + BR x 0.460 | SL x 0.930 + BL x 0.700 + BR x 0.460 |
| BR | | | FR x 0.274 + SL x 0.422 + SR x 0.960 | BR | BR | SR x 0.930 + BL x 0.460 + BR x 0.700 | SR x 0.930 + BL x 0.460 + BR x 0.700 |

3.6.5 FMOD_SPEAKERMODE_5POINT1

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| FL | M x 0.707 | L | FL x 0.961 | FL | FL | FL + SL x 0.367 | FL + SL x 0.367 |
| FR | M x 0.707 | R | FR x 0.961 | FR | FR | FR + SR x 0.367 | FR + SR x 0.367 |
| C | | | | C | C | C | C |
| LFE | | | | | LFE | LFE | LFE |
| BL | | | FL x 0.274 + SL x 0.960 + SR x 0.422 | BL | BL | SL x 0.930 + BL x 0.700 + BR x 0.460 | SL x 0.930 + BL x 0.700 + BR x 0.460 |
| BR | | | FR x 0.274 + SL x 0.422 + SR x 0.960 | BR | BR | SR x 0.930 + BL x 0.460 + BR x 0.700 | SR x 0.930 + BL x 0.460 + BR x 0.700 |

3.6.6 FMOD_SPEAKERMODE_7POINT1

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| FL | M x 0.707 | L | FL x 0.939 | FL | FL | FL | FL |
| FR | M x 0.707 | R | FR x 0.939 | FR | FR | FR | FR |
| C | | | | C | C | C | C |
| LFE | | | | | LFE | LFE | LFE |
| SL | | | FL x 0.344 + SL x 0.344 | BL x 0.883 | BL x 0.883 | SL | SL |
| SR | | | FR x 0.344 + SR x 0.344 | BR x 0.883 | BR x 0.883 | SR | SR |
| BL | | | SL x 0.939 | BL x 0.470 | BL x 0.470 | BL | BL |
| BR | | | SR x 0.939 | BR x 0.470 | BR x 0.470 | BR | BR |

3.6.7 FMOD_SPEAKERMODE_7POINT1POINT4

| Output | Mono | Stereo | Quad | 5.0 | 5.1 | 7.1 | 7.1.4 |
|---|---|---|---|---|---|---|---|
| FL | M x 0.707 | L | FL x 0.939 | FL | FL | FL | FL |
| FR | M x 0.707 | R | FR x 0.939 | FR | FR | FR | FR |
| C | | | | C | C | C | C |
| LFE | | | | | LFE | LFE | LFE |
| SL | | | FL x 0.344 + SL x 0.344 | BL x 0.883 | BL x 0.883 | SL | SL |
| SR | | | FR x 0.344 + SR x 0.344 | BR x 0.883 | BR x 0.883 | SR | SR |
| BL | | | SL x 0.939 | BL x 0.470 | BL x 0.470 | BL | BL |
| BR | | | SR x 0.939 | BR x 0.470 | BR x 0.470 | BR | BR |
| TFL | | | | | | | TFL |
| TFR | | | | | | | TFR |
| TBL | | | | | | | TBL |
| TBR | | | | | | | TBR |

3.7 Advanced Sound Creation

Several FMOD_MODE flags for Sound creation require FMOD_CREATESOUNDEXINFO to specify properties of the sound, such as its data format, frequency, length and callbacks. The following sections explain how to use these modes and give a basic example of creating a sound with each one.

3.7.1 Creating a Sound from memory

FMOD_OPENMEMORY

FMOD_OPENMEMORY causes FMOD to interpret the first argument of System::createSound or System::createStream as a pointer to memory instead of a filename. FMOD_CREATESOUNDEXINFO::length specifies the length of the sound, specifically the number of bytes the sound's data occupies in memory. The data is copied into FMOD's buffers and can be freed after the sound is created. If using FMOD_CREATESTREAM, the data is instead streamed directly from the buffer you passed in, so make sure the memory is not freed until you have finished with and released the stream.

// C++
FMOD::Sound *sound;
FMOD_CREATESOUNDEXINFO exinfo;
void *buffer = 0;
unsigned int length = 0;

//
// Load your audio data to the "buffer" pointer here, and set "length" to its size in bytes
//

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.length = length; // Size of the audio data in memory, in bytes

system->createSound((const char *)buffer, FMOD_OPENMEMORY, &exinfo, &sound);

// The audio data pointed to by "buffer" has been duplicated into FMOD's buffers, and can now be freed
// However, if loading as a stream with FMOD_CREATESTREAM or System::createStream, the memory must stay active, so do not free it!

// C
FMOD_SOUND *sound;
FMOD_CREATESOUNDEXINFO exinfo;
void *buffer = 0;
unsigned int length = 0;

//
// Load your audio data to the "buffer" pointer here, and set "length" to its size in bytes
//

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.length = length; // Size of the audio data in memory, in bytes

FMOD_System_CreateSound(system, (const char *)buffer, FMOD_OPENMEMORY, &exinfo, &sound);

// The audio data pointed to by "buffer" has been duplicated into FMOD's buffers, and can now be freed
// However, if loading as a stream with FMOD_CREATESTREAM or FMOD_System_CreateStream, the memory must stay active, so do not free it!

// C#
FMOD.Sound sound;
FMOD.CREATESOUNDEXINFO exinfo;
byte[] buffer;

//
// Load your audio data to the "buffer" array here
//

// Create extended sound info struct
exinfo = new FMOD.CREATESOUNDEXINFO();
exinfo.cbsize = Marshal.SizeOf(typeof(FMOD.CREATESOUNDEXINFO)); // Size of the struct
exinfo.length = (uint)buffer.Length; // Size of the audio data in memory, in bytes

system.createSound(buffer, FMOD.MODE.OPENMEMORY, ref exinfo, out sound);

// The audio data stored by the "buffer" array has been duplicated into FMOD's buffers, and can now be freed
// However, if loading as a stream with FMOD.MODE.CREATESTREAM or System.createStream, you must pin "buffer" with GCHandle so that it stays active

// JavaScript
var sound = {};
var outval = {};
var buffer;

//
// Load your audio data to a Uint8Array and assign it to "buffer" var here
//

// Create extended sound info struct
// No need to define cbsize, the struct already knows its own size in JS
var exinfo = FMOD.CREATESOUNDEXINFO();
exinfo.length = buffer.length; // Size of the audio data in memory, in bytes

system.createSound(buffer.buffer, FMOD.OPENMEMORY, exinfo, outval);
sound = outval.val;

// The audio data stored in the "buffer" var has been duplicated into FMOD's buffers, and can now be freed
// However, if loading as a stream with FMOD.CREATESTREAM or System.createStream, the memory must stay active, so do not free it!
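The examples above leave loading the data into "buffer" as a placeholder. One way to fill it in C++ is to read the whole file into a std::vector, as sketched below; `readWholeFile` is a hypothetical helper, not part of FMOD. With FMOD_OPENMEMORY the vector can be destroyed once createSound returns, but with FMOD_CREATESTREAM it must outlive the stream.

```cpp
#include <cassert>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// Hypothetical helper: reads an entire file into memory in binary mode.
// Returns an empty vector if the file cannot be opened.
std::vector<char> readWholeFile(const std::string &path) {
    assert(!path.empty());
    std::ifstream file(path, std::ios::binary);
    if (!file) return {};
    return std::vector<char>(std::istreambuf_iterator<char>(file),
                             std::istreambuf_iterator<char>());
}
```

In the C++ example, `data.data()` would then take the place of `buffer` and `static_cast<unsigned int>(data.size())` the place of `length`.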

FMOD_OPENMEMORY_POINT

FMOD_OPENMEMORY_POINT also causes FMOD to interpret the first argument of System::createSound or System::createStream as a pointer to memory instead of a filename. However, unlike FMOD_OPENMEMORY, FMOD will use the memory as is instead of copying it to its own buffers. As a result, you may only free the memory after Sound::release is called. FMOD_CREATESOUNDEXINFO::length is used to specify the length of the sound, specifically the amount of memory in bytes the sound's data occupies.

// C++
FMOD::Sound *sound;
FMOD_CREATESOUNDEXINFO exinfo;
void *buffer = 0;
unsigned int length = 0;

//
// Load your audio data to the "buffer" pointer here, and set "length" to its size in bytes
//

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.length = length; // Size of the audio data in memory, in bytes

system->createSound((const char *)buffer, FMOD_OPENMEMORY_POINT, &exinfo, &sound);

// As FMOD is using the data stored at the buffer pointer as is, without copying it into its own buffers, the memory cannot be freed until after Sound::release is called

// C
FMOD_SOUND *sound;
FMOD_CREATESOUNDEXINFO exinfo;
void *buffer = 0;
unsigned int length = 0;

//
// Load your audio data to the "buffer" pointer here, and set "length" to its size in bytes
//

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.length = length; // Size of the audio data in memory, in bytes

FMOD_System_CreateSound(system, (const char *)buffer, FMOD_OPENMEMORY_POINT, &exinfo, &sound);

// As FMOD is using the data stored at the buffer pointer as is, without copying it into its own buffers, the memory cannot be freed until after FMOD_Sound_Release is called

// C#
FMOD.Sound sound;
FMOD.CREATESOUNDEXINFO exinfo;
byte[] buffer;
GCHandle gch;

//
// Load your audio data to the "buffer" array here
//

// Pin data in memory so a pointer to it can be passed to FMOD's unmanaged code
gch = GCHandle.Alloc(buffer, GCHandleType.Pinned);

// Create extended sound info struct
exinfo = new FMOD.CREATESOUNDEXINFO();
exinfo.cbsize = Marshal.SizeOf(typeof(FMOD.CREATESOUNDEXINFO)); // Size of the struct
exinfo.length = (uint)buffer.Length; // Size of the audio data in memory, in bytes

system.createSound(gch.AddrOfPinnedObject(), FMOD.MODE.OPENMEMORY_POINT, ref exinfo, out sound);

// As FMOD is using the data stored at the buffer pointer as is, without copying it into its own buffers, the memory must stay active and pinned
// Unpin memory with gch.Free() after Sound.release has been called

Not supported for JavaScript.
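Because FMOD_OPENMEMORY_POINT borrows the memory, the release order matters: release the Sound first, free the buffer second. One way to make that order automatic in C++ is to keep the buffer and the release step in a single owner object, as sketched below. `PointSoundOwner` is hypothetical, and the release step is injected as a std::function so the sketch compiles without FMOD; in real code it would call Sound::release.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Owns the bytes an FMOD_OPENMEMORY_POINT sound reads from, plus the step that
// releases that sound. Member destructors run after the destructor body, so the
// sound is always released before the buffer is freed.
class PointSoundOwner {
public:
    explicit PointSoundOwner(std::vector<char> bytes) : bytes_(std::move(bytes)) {}
    PointSoundOwner(const PointSoundOwner &) = delete;
    PointSoundOwner &operator=(const PointSoundOwner &) = delete;

    ~PointSoundOwner() {
        if (releaseSound_) releaseSound_(); // in real code: sound->release()
    }

    // Pointer and size to pass as createSound's first argument and exinfo.length.
    const char *data() const { return bytes_.data(); }
    unsigned int length() const { return static_cast<unsigned int>(bytes_.size()); }

    // Registers how to release the sound once it has been created from data().
    void setReleaseStep(std::function<void()> releaseSound) {
        assert(!releaseSound_ && "release step already set");
        releaseSound_ = std::move(releaseSound);
    }

private:
    std::vector<char> bytes_;
    std::function<void()> releaseSound_;
};
```

The copy operations are deleted so that two owners can never free the same buffer or release the same sound twice.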

3.7.2 Creating a Sound from PCM data

FMOD_OPENRAW

FMOD_OPENRAW causes FMOD to ignore the format of the provided audio file, and instead treat it as raw PCM data. Use FMOD_CREATESOUNDEXINFO to specify the frequency, number of channels, and data format of the file.

// C++
FMOD::Sound *sound;
FMOD_CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.format = FMOD_SOUND_FORMAT_PCM16; // Data format of sound

system->createSound("./Your/File/Path/Here.raw", FMOD_OPENRAW, &exinfo, &sound);

// C
FMOD_SOUND *sound;
FMOD_CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.format = FMOD_SOUND_FORMAT_PCM16; // Data format of sound

FMOD_System_CreateSound(system, "./Your/File/Path/Here.raw", FMOD_OPENRAW, &exinfo, &sound);

// C#
FMOD.Sound sound;
FMOD.CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
exinfo = new FMOD.CREATESOUNDEXINFO();
exinfo.cbsize = Marshal.SizeOf(typeof(FMOD.CREATESOUNDEXINFO)); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.format = FMOD.SOUND_FORMAT.PCM16; // Data format of sound

system.createSound("./Your/File/Path/Here.raw", FMOD.MODE.OPENRAW, ref exinfo, out sound);

// JavaScript
var sound = {};
var outval = {};

// Create extended sound info struct
// No need to define cbsize, the struct already knows its own size in JS
var exinfo = FMOD.CREATESOUNDEXINFO();
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.format = FMOD.SOUND_FORMAT.PCM16; // Data format of sound

system.createSound("./Your/File/Path/Here.raw", FMOD.OPENRAW, exinfo, outval);
sound = outval.val;
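To try the FMOD_OPENRAW examples, the .raw file must actually contain data in the format described by exinfo: here, interleaved signed 16-bit stereo at 44100 Hz. The sketch below writes such a file containing a sine tone. It assumes the samples should be little-endian, the usual layout on the desktop and mobile platforms FMOD targets (verify this for your platform), and writes the bytes explicitly so the host's byte order does not matter. `writeRawTone` is a hypothetical helper.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <string>

// Hypothetical helper: writes 'seconds' of a sine tone as interleaved, little-endian,
// signed 16-bit PCM with the same sample on every channel. With the defaults this
// matches numchannels = 2, defaultfrequency = 44100 and FMOD_SOUND_FORMAT_PCM16.
// Returns the number of bytes written (0 if the file could not be opened).
std::size_t writeRawTone(const std::string &path, double frequencyHz, int seconds,
                         int sampleRate = 44100, int channels = 2) {
    assert(sampleRate > 0 && channels > 0 && seconds >= 0);
    std::ofstream out(path, std::ios::binary);
    if (!out) return 0;
    const double kPi = 3.14159265358979323846;
    const long totalFrames = static_cast<long>(sampleRate) * seconds;
    std::size_t written = 0;
    for (long frame = 0; frame < totalFrames; ++frame) {
        double value = 0.5 * std::sin(2.0 * kPi * frequencyHz * frame / sampleRate);
        auto bits = static_cast<std::uint16_t>(static_cast<std::int16_t>(std::lround(value * 32767.0)));
        // Low byte first, regardless of the host's byte order.
        const char bytes[2] = {static_cast<char>(bits & 0xFF), static_cast<char>(bits >> 8)};
        for (int c = 0; c < channels; ++c) {
            out.write(bytes, 2);
            written += 2;
        }
    }
    return written;
}
```

One second at the default settings produces 44100 frames x 2 channels x 2 bytes = 176400 bytes, which FMOD should report as one second long when opened with the exinfo shown above.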

3.7.3 Creating a Sound by manually providing sample data

FMOD_OPENUSER

FMOD_OPENUSER causes FMOD to ignore the first argument of System::createSound or System::createStream, and instead create a static sample or stream to which you must manually provide audio data. Use FMOD_CREATESOUNDEXINFO to specify the frequency, number of channels, and data format. You can optionally provide a read callback, which is used to place your own audio data into FMOD's buffers. If no read callback is provided, the sample will be empty, so Sound::lock and Sound::unlock must be used to provide audio data instead.

// C++
FMOD::Sound *sound;
FMOD_CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.length = exinfo.defaultfrequency * exinfo.numchannels * sizeof(signed short) * 5; // Length of sound - PCM data in bytes. 5 = seconds
exinfo.format = FMOD_SOUND_FORMAT_PCM16; // Data format of sound
exinfo.pcmreadcallback = MyReadCallbackFunction; // To supply sound data from a callback, set the pcmreadcallback field

// Alternatively, leave pcmreadcallback unset and use Sound::lock and Sound::unlock to submit sample data to the sound
// As sample data is provided via the callback or Sound::lock and Sound::unlock, pass null or equivalent as the first argument
system->createSound(0, FMOD_OPENUSER, &exinfo, &sound);

// C
FMOD_SOUND *sound;
FMOD_CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
memset(&exinfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));
exinfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.length = exinfo.defaultfrequency * exinfo.numchannels * sizeof(signed short) * 5; // Length of sound - PCM data in bytes. 5 = seconds
exinfo.format = FMOD_SOUND_FORMAT_PCM16; // Data format of sound
exinfo.pcmreadcallback = MyReadCallbackFunction; // To supply sound data from a callback, set the pcmreadcallback field

// Alternatively, leave pcmreadcallback unset and use FMOD_Sound_Lock and FMOD_Sound_Unlock to submit sample data to the sound
// As sample data is provided via the callback or FMOD_Sound_Lock and FMOD_Sound_Unlock, pass null or equivalent as the second argument
FMOD_System_CreateSound(system, NULL, FMOD_OPENUSER, &exinfo, &sound);

// C#
FMOD.Sound sound;
FMOD.CREATESOUNDEXINFO exinfo;

// Create extended sound info struct
exinfo = new FMOD.CREATESOUNDEXINFO();
exinfo.cbsize = Marshal.SizeOf(typeof(FMOD.CREATESOUNDEXINFO)); // Size of the struct
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.length = (uint)(exinfo.defaultfrequency * exinfo.numchannels * sizeof(short) * 5); // Length of sound - PCM data in bytes. 5 = seconds
exinfo.format = FMOD.SOUND_FORMAT.PCM16; // Data format of sound
exinfo.pcmreadcallback = MyReadCallbackFunction; // To supply sound data from a callback, set the pcmreadcallback field

// Alternatively, leave pcmreadcallback unset and use Sound.lock and Sound.unlock to submit sample data to the sound
// As sample data is provided via the callback or Sound.lock and Sound.unlock, pass an empty string as the first argument
system.createSound("", FMOD.MODE.OPENUSER, ref exinfo, out sound);

// JavaScript
var sound = {};
var outval = {};

// Create extended sound info struct
// No need to define cbsize, the struct already knows its own size in JS
var exinfo = FMOD.CREATESOUNDEXINFO();
exinfo.numchannels = 2; // Number of channels in the sound
exinfo.defaultfrequency = 44100; // Default playback rate of sound
exinfo.length = exinfo.defaultfrequency * exinfo.numchannels * 2 * 5; // Length of sound - PCM data in bytes. 2 = sizeof(short) and 5 = seconds
exinfo.format = FMOD.SOUND_FORMAT.PCM16; // Data format of sound
exinfo.pcmreadcallback = MyReadCallbackFunction; // To supply sound data from a callback, set the pcmreadcallback field

// Alternatively, leave pcmreadcallback unset and use Sound.lock and Sound.unlock to submit sample data to the sound
// As sample data is provided via the callback or Sound.lock and Sound.unlock, pass an empty string as the first argument
system.createSound("", FMOD.OPENUSER, exinfo, outval);
sound = outval.val;
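The examples above reference MyReadCallbackFunction without defining it. The sketch below shows the kind of work such a callback does: fill the requested number of bytes with interleaved PCM16 stereo samples, keeping the waveform's phase between calls so that consecutive buffers join without clicks. It is written as a plain function so it compiles without FMOD; in a real pcmreadcallback, FMOD passes the sound, the destination pointer and its length in bytes (check FMOD_SOUND_PCMREAD_CALLBACK for the exact signature), and the state could be stored with Sound::setUserData. `ToneState` and `fillToneBuffer` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// Hypothetical per-sound state, kept between callback invocations.
struct ToneState {
    double phase = 0.0;         // current position in the waveform, in radians
    double frequencyHz = 440.0;
    double sampleRate = 44100.0;
};

// Fills 'datalen' bytes at 'data' with interleaved signed 16-bit stereo samples in
// native byte order. Trailing bytes that do not form a whole stereo frame are zeroed.
void fillToneBuffer(void *data, unsigned int datalen, ToneState &state) {
    assert(data != nullptr || datalen == 0);
    const double kTwoPi = 6.28318530717958647692;
    auto *samples = static_cast<std::int16_t *>(data);
    const unsigned int frameBytes = 2 * sizeof(std::int16_t);
    const unsigned int frames = datalen / frameBytes;
    for (unsigned int f = 0; f < frames; ++f) {
        auto value = static_cast<std::int16_t>(std::lround(0.5 * std::sin(state.phase) * 32767.0));
        samples[2 * f] = value;     // left
        samples[2 * f + 1] = value; // right
        state.phase += kTwoPi * state.frequencyHz / state.sampleRate;
        if (state.phase >= kTwoPi) state.phase -= kTwoPi; // keep the phase bounded
    }
    const unsigned int used = frames * frameBytes;
    std::memset(static_cast<char *>(data) + used, 0, datalen - used);
}
```

With the exinfo settings shown above, the five-second sound holds 44100 x 2 x 2 x 5 = 882000 bytes, and FMOD requests that data from the callback in chunks rather than all at once.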

3.8 Extracting PCM Data From a Sound

The following demonstrates how to extract PCM data from a Sound into a buffer using Sound::readData. Pass FMOD_OPENONLY as the mode argument of System::createSound so that the file handle stays open for reading; other modes read the entire file's data and then close the file handle automatically. Sound::seekData can also be used to seek within the Sound's data before reading into a buffer.

// C++
FMOD::Sound *sound;
unsigned int length;
char *buffer;

system->createSound("drumloop.wav", FMOD_OPENONLY, nullptr, &sound);
sound->getLength(&length, FMOD_TIMEUNIT_RAWBYTES);

buffer = new char[length];
sound->readData(buffer, length, nullptr);

delete[] buffer;

// C
FMOD_SOUND *sound;
unsigned int length;
char *buffer;

FMOD_System_CreateSound(system, "drumloop.wav", FMOD_OPENONLY, 0, &sound);
FMOD_Sound_GetLength(sound, &length, FMOD_TIMEUNIT_RAWBYTES);

buffer = (char *)malloc(length);
FMOD_Sound_ReadData(sound, (void *)buffer, length, 0);

free(buffer);

// C#
FMOD.Sound sound;
uint length;
byte[] buffer;

system.createSound("drumloop.wav", FMOD.MODE.OPENONLY, out sound);
sound.getLength(out length, FMOD.TIMEUNIT.RAWBYTES);

buffer = new byte[(int)length];
sound.readData(buffer);

// JavaScript
var sound = {};
var length = {};
var buffer = {};

system.createSound("drumloop.wav", FMOD.OPENONLY, null, sound);
sound = sound.val;

sound.getLength(length, FMOD.TIMEUNIT_RAWBYTES);
length = length.val;

sound.readData(buffer, length, null);
buffer = buffer.val;
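The buffer filled by readData holds samples in the Sound's own format, so interpreting it requires knowing that format. If Sound::getFormat reports FMOD_SOUND_FORMAT_PCM16, the bytes can be turned into normalized floats as sketched below. The little-endian byte order and the `pcm16BytesToFloat` name are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts little-endian signed 16-bit samples to floats in [-1, 1).
// A trailing odd byte, if any, is ignored.
std::vector<float> pcm16BytesToFloat(const unsigned char *bytes, std::size_t length) {
    assert(bytes != nullptr || length == 0);
    std::vector<float> out;
    out.reserve(length / 2);
    for (std::size_t i = 0; i + 1 < length; i += 2) {
        auto bits = static_cast<std::uint16_t>(bytes[i] | (bytes[i + 1] << 8));
        auto sample = static_cast<std::int16_t>(bits);
        out.push_back(sample / 32768.0f);
    }
    return out;
}
```

With the C++ example above, this would be called as `pcm16BytesToFloat(reinterpret_cast<unsigned char *>(buffer), length)` before `delete[] buffer`. For multi-channel sounds the resulting floats are still interleaved.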

See Also: FMOD_TIMEUNIT, FMOD_MODE, Sound::getLength, System::createSound, Sound::seekData