Chat locally with any PDF

Ask questions and get answers with useful references

Works well with math PDFs (converts them to LaTeX, a math syntax that computers can parse)

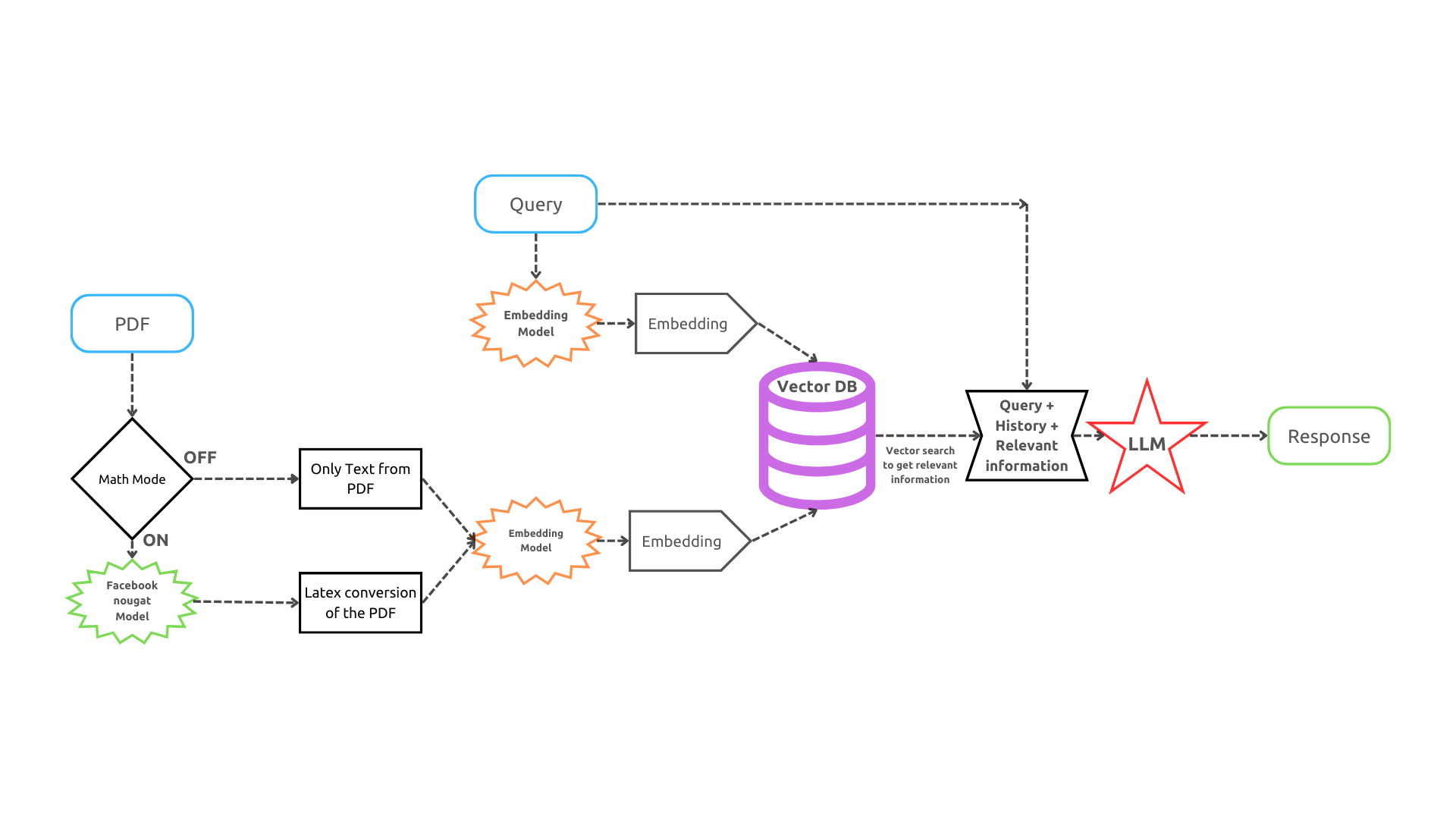

## Workflow chart
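
The stages mentioned throughout this README are roughly: PDF text (converted to LaTeX in math mode) → chunks → vectors in a vector DB → vector search for the relevant chunks → LLM answer with references. As a minimal sketch of the first and last stages, assuming simple character-based splitting, the code below tags each chunk with its page number so the prompt can ask the LLM to cite pages. `Chunk`, `chunk_pages`, `build_prompt` and the 500/50 sizes are hypothetical, not the project's actual code, and the embedding and vector-search step in between is left out.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    page: int  # 1-based page number, kept so answers can cite it
    text: str


def chunk_pages(pages: list[str], size: int = 500, overlap: int = 50) -> list[Chunk]:
    """Split each page into overlapping character windows tagged with their page number."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    chunks = []
    step = size - overlap  # each window starts `step` characters after the previous one
    for page_no, text in enumerate(pages, start=1):
        for start in range(0, max(len(text), 1), step):
            piece = text[start:start + size]
            if piece.strip():  # skip empty or whitespace-only windows
                chunks.append(Chunk(page_no, piece))
            if start + size >= len(text):  # this window reached the end of the page
                break
    return chunks


def build_prompt(question: str, retrieved: list[Chunk]) -> str:
    """Put the retrieved chunks in the prompt, each labelled with its source page."""
    context = "\n\n".join(f"[page {c.page}] {c.text}" for c in retrieved)
    return (
        "Answer the question using only the context below, "
        "and cite the [page N] labels you rely on.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

For example, `chunk_pages(["a" * 1200])` yields three chunks from page 1 (characters 0-500, 450-950 and 900-1200), and passing any subset of chunks to `build_prompt` labels each one with `[page N]`.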

## Demos

Chatbot test with a PDF of US laws

<video src="https://git.rufous-trench.ts.net/Crizomb/Medias/raw/branch/main/ai_pdf_test_law.mp4" controls></video>

Chatbot test with a math PDF (interpreted as LaTeX by the LLM)

<video src="https://git.rufous-trench.ts.net/Crizomb/Medias/raw/branch/main/ai_pdf_test_math.mp4" controls></video>

Full-length process: converting a PDF to LaTeX, then using the chatbot

<video src="https://git.rufous-trench.ts.net/Crizomb/Medias/raw/branch/main/ai_pdf_full_length.mp4" controls></video>

## How to use

* Clone the project to a location we will call `x`

* Install the requirements listed in the `requirements.txt` file

* (Open a terminal, go to the `x` location, and run `pip install -r requirements.txt`)

* ([OPTIONAL] For better performance during embedding, install PyTorch with CUDA; see https://pytorch.org/get-started/locally/)

* Put your PDFs in `x/ai_pdf/documents/pdfs`

* Run `x/ai_pdf/front_end/main.py`

* Choose whether to enable math mode

* Choose the PDF you want to work on (it must be in `x/ai_pdf/documents/pdfs` to work well)

* Wait a little while for the PDF to be vectorized (check Task Manager to see if your GPU is going vroom)

* Launch LM Studio, go to the Local Server tab, choose the model you want to run, set the server port to 1234, and start the server

* (If you want to use OpenAI or any other cloud LLM service, edit line 10 of `x/ai_pdf/back_end/inference.py` with your API key and your provider URL)

* Ask the chatbot questions

* Get answers

* Go eat cookies
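
Once the LM Studio server is running, one way to check it independently of the GUI is to send a single request yourself. The sketch below is an illustration under assumptions, not the contents of `inference.py`: it assumes LM Studio's local server exposes the OpenAI-compatible `/v1/chat/completions` endpoint on port 1234, and `build_chat_request`, `ask`, the `local-model` name and the `lm-studio` key are hypothetical placeholders. Pointing `base_url` and `api_key` at a cloud provider mirrors the kind of change the `inference.py` step describes.

```python
import json
import urllib.request

# Assumed defaults: LM Studio's local server on port 1234, OpenAI-compatible API under /v1.
BASE_URL = "http://localhost:1234/v1"
API_KEY = "lm-studio"  # placeholder; a local server typically does not check it
MODEL = "local-model"  # hypothetical name; use the model you loaded in LM Studio


def build_chat_request(question: str, context: str,
                       base_url: str = BASE_URL, api_key: str = API_KEY,
                       model: str = MODEL) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Answer using only the provided context."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(question: str, context: str, **kwargs) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(question, context, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

For example, `ask("What does section 2 cover?", "[page 2] Section 2 covers taxes.")` returns the model's reply as a string, or raises `urllib.error.URLError` if nothing is listening on port 1234.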

### TODO

- [ ] Option tabs

- [ ] Add more embedding models

- [ ] Add a menu to choose how many relevant chunks of information the vector search should retrieve from the vector DB

- [ ] Add a menu to configure the API URL and API key
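
Choosing how many chunks to retrieve amounts to a top-k parameter on the vector search. As a minimal sketch, assuming plain cosine similarity over in-memory embedding vectors (a real vector DB performs this search itself), `top_k_chunks` and its `k` argument are hypothetical names for what such a menu setting would control.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_chunks(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 4) -> list[int]:
    """Return the indices of the k chunks most similar to the query, best first."""
    if k <= 0:
        return []
    scored = ((cosine(query_vec, v), i) for i, v in enumerate(chunk_vecs))
    # nlargest keeps only k candidates instead of sorting every chunk
    return [i for _, i in heapq.nlargest(k, scored)]
```

A larger `k` gives the LLM more context (and more passages it can cite) at the cost of a longer prompt; if `k` exceeds the number of chunks, all of them are returned.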

## Maybe in the future

- [ ] Add special support for code PDFs (with a specialized LangChain code splitter)

- [ ] Add multimodality
